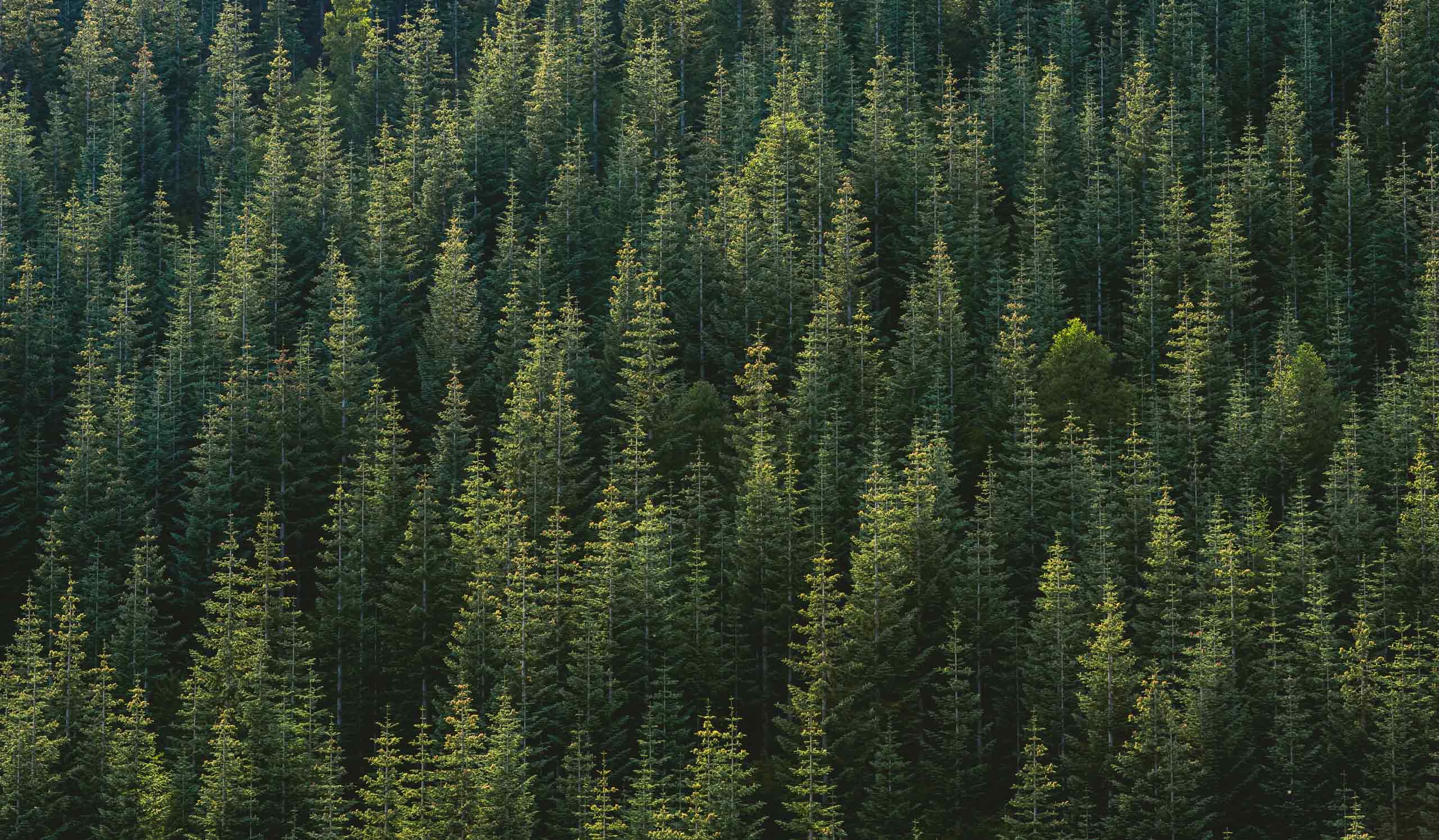

Earth Centred Art & Parenting

Welcome to Sarah Woodrow’s site, a space where Earth-centered art and parenting converge. I embrace the challenges of raising children in a time of collapse and multiple crises. I strive to instill in my children a profound appreciation for nature’s interconnectedness, nurturing resilience and empathy through art and expression. This is where I share with you what I learn about regenerative parenting, art and culture…